Our Verdict

The Vega 56 should be the price/performance hero of AMD's top GPU architecture, but it's pricing itself out of the running, with gaming frame rates well short of the competition.

The AMD Radeon RX Vega 56 was the second-tier option in the 14nm Vega family, and that would normally have made it our price/performance favourite… but the Vega 56 was always on a bit of a hiding to nothing. Team Radeon’s Sith-inspired rule-of-two chip design means every GPU it creates eventually gets released as a pair of gaming graphics cards. So, following on from the AMD RX Vega 64, came the lower-spec RX Vega 56, struggling to keep up with a GTX 1070 and beaten bloody by Nvidia’s GTX 1070 Ti. Poor lamb.

And now, with the release of the GTX 1660 Ti cards, Nvidia has created a new lower tier of Turing GPUs that can undercut AMD’s Vega GPUs on both price and performance. The GTX 1660 Ti is a card that’s knocking around the $300 mark and, even though the RX Vega 56 is now available for around that price, it mostly loses out to the latest GeForce GPUs.

Much of AMD’s Vega struggles can be traced back to the choice of HBM2 as the memory technology to be used across the professional and consumer variants of the Vega design.

If AMD had simply opted for the traditional GDDR5/X memory, Vega would have been here a lot sooner and, importantly, a lot cheaper. Unfortunately, it seems changing the memory compatibility is a toughie, or we might have seen lower-spec cards.

Bless the ol’ AMD Vega architecture, it’s had a bit of a hard time. Since it was first announced, in hushed whispers, as the first high-end Radeon GPU in an age, it was hailed both as the saviour of AMD’s graphics division and the architecture that might damn it. In all honesty, it’s neither.

The lack of stock and confusion over pricing initially left a bit of a nasty taste in the mouth, with accusations flying around about the original RX Vega 56’s $399 price given out to reviewers being only a short-term deal. And now it’s as cheap as $277 (£276), but the post-crypto-mining price cuts are just too little and far too late.

AMD Radeon RX Vega 56 specs

There’s not a huge difference between the GPUs at the heart of the top-end RX Vega 64 and this lower-caste RX Vega 56. As the name suggests, the second-tier card has 56 compute units (CUs) compared with the 64 CU count of the higher-spec card. That means AMD has jammed 3,584 GCN cores into the RX Vega 56 instead of the previous card’s 4,096 GCN cores.
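The core counts quoted above follow directly from the CU count, because each GCN compute unit houses 64 stream processors. As a quick sanity check, here is a minimal sketch; the function and constant names are ours, purely for illustration:

```python
# Each GCN compute unit (CU) contains 64 stream processors ("GCN cores").
STREAM_PROCESSORS_PER_CU = 64


def stream_processors(compute_units: int) -> int:
    """Return the total stream processor count for a given number of CUs."""
    return compute_units * STREAM_PROCESSORS_PER_CU


# Hypothetical lookup of the two Vega cards by their CU counts.
cards = {"RX Vega 64": 64, "RX Vega 56": 56}

for name, cus in cards.items():
    print(f"{name}: {cus} CUs -> {stream_processors(cus):,} stream processors")
```

Run it and you get 4,096 for the RX Vega 64 and 3,584 for the RX Vega 56, matching the figures in the spec table.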

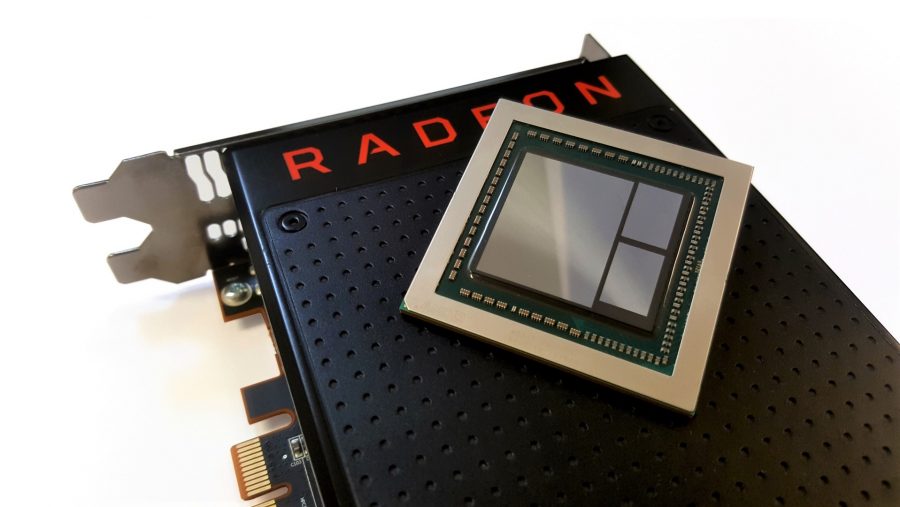

Other than that it’s purely a case of some tweaked power settings and lower clockspeeds – everything else is identical, down to the cooling design. That means we’re still talking about 8GB of HBM2 video memory and a 2,048-bit bus. It’s the same on-die configuration that makes the Vega 10 GPU a 486mm² mammoth (the GP102 used in the GTX 1080 Ti is just 314mm² for comparison) with 12.5bn transistors inside it.

| | RX Vega 64 | RX Vega 56 | RX 580 | GTX 1080 Ti |
| --- | --- | --- | --- | --- |
| GPU | AMD Vega 10 | AMD Vega 10 | AMD Polaris 20 | Nvidia GP102 |
| Architecture | GCN 4.0 | GCN 4.0 | GCN 4.0 | Pascal |
| Lithography | 14nm FinFET | 14nm FinFET | 14nm FinFET | 16nm FinFET |
| Transistors | 12.5bn | 12.5bn | 5.7bn | 7.2bn |
| Die size | 486mm² | 486mm² | 232mm² | 314mm² |
| Base clockspeed | 1,247MHz | 1,156MHz | 1,257MHz | 1,480MHz |
| Boost clockspeed | 1,546MHz | 1,471MHz | 1,340MHz | 1,645MHz |
| Stream Processors | 4,096 | 3,584 | 2,304 | 3,584 |
| Texture units | 256 | 256 | 144 | 224 |
| Memory Capacity | 8GB HBM2 | 8GB HBM2 | 8GB GDDR5 | 11GB GDDR5X |
| Memory bus | 2,048-bit | 2,048-bit | 256-bit | 352-bit |
| TDP | 295W | 210W | 185W | 250W |

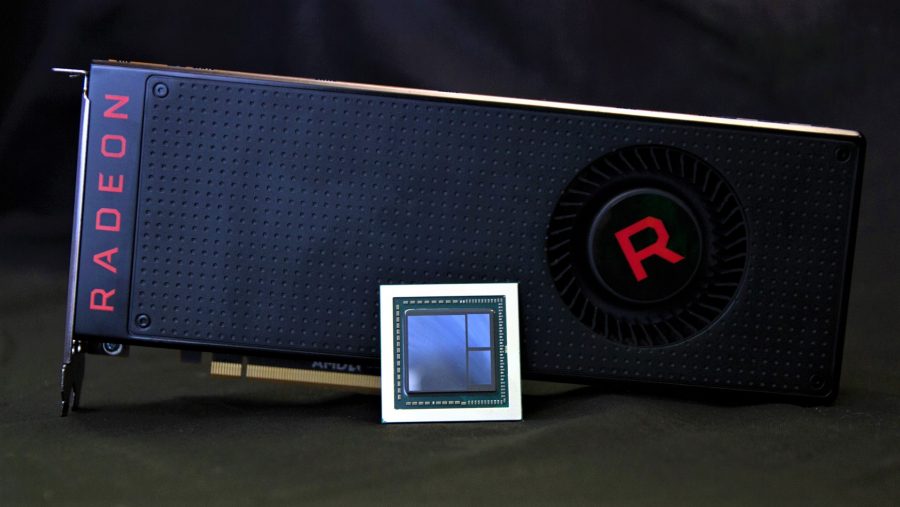

There’s no liquid-chilled version of the RX Vega 56, nor are there any super-sexeh Silver Shroud variants. From the looks of things, AMD only made maybe five of either anyway, and I think those just went out to friends and family…

In terms of the actual Vega GPU architecture, we’ve gone into detail about what AMD’s engineers have been trying to create in our RX Vega 64 review. Essentially, they’re calling it their broadest architectural update in five years, dating back to when AMD unleashed the original Graphics Core Next (GCN) design – and it brings with it some interesting new features.

One of these is the Infinity Fabric interconnect, which binds the GPU to all the other components in the chip, making it a more modular architecture than previous generations. It’s also the key factor in making multi-GPU packages possible in the future. Then there’s the new compute unit and AMD’s high-bandwidth cache controller (HBCC), offering the promise of the tech improving as more developers start taking advantage of it.

AMD Radeon RX Vega 56 benchmarks

PCGamesN Test Rig: Intel Core i7 8700K, Asus ROG Strix Z370-F Gaming, 16GB Crucial Ballistix DDR4, Corsair HX1200i, Philips BDM3275

AMD Radeon RX Vega 56 performance

The big challenge for the RX Vega 56 – outside of trying to magic up affordable stock for people to actually buy – is to push past the similarly priced Nvidia competition. Just as the RX Vega 64 was supposedly priced to go head-to-head with the GTX 1080, the RX Vega 56 was released into the wild with the GTX 1070 in its sights. Unfortunately Nvidia hit back with the GTX 1070 Ti and more recently the GTX 1660 Ti.

Like the RX Vega 64’s attempts to overthrow the GTX 1080, the Vega 56 struggles against its targets. As we said about the flagship Vega GPU, it’s an architecture which seems to have been primarily designed for a gaming future that’s yet to be created. As such, it’s rather lacklustre in its legacy gaming performance.

With games built using the last-gen DirectX 11 API, the second-class Vega is left trailing in the wake of the GTX 1070, and that means it’s off the pace against the latest GTX Turing cards too. But when you start to bring in tests based around the newer DirectX 12 or Vulkan APIs, Vega’s modern architectural design allows it to occasionally take the lead.

Unfortunately, despite DX11 now being very much a legacy API, with DX12 being over a year old, the majority of PC games are still being released using the older system. And that means for the majority of PC games that come out over the next six months, at least, the GTX 1660 Ti is likely to retain that performance advantage.

Granted, Vega’s performance improvements in DX12 and Vulkan are encouraging, if they ever become the dominant APIs, but right now anyone lucky enough to be able to find the RX Vega 56 for a decent price is still going to be paying the same money for a card that struggles to beat a smaller, more efficient, GPU in pretty much every game that’s in their Steam library.

That’s not the only competition for the RX Vega 56, however, as there’s also the small matter of fratricide. As is its wont, despite the $100 price difference in their relative SEP, AMD hasn’t made sweeping changes to the core configuration of the two Vega variants. Essentially, the RX Vega 56 is simply operating with 12.5% fewer cores, yet in performance terms is only ever around 7-10% slower.

Yeah, Vega’s weird.
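To put numbers on that weirdness, here is a rough sketch of the core-count arithmetic above. It takes the 7-10% performance gap at face value as our observed range and ignores the RX Vega 56’s lower clockspeeds, so treat the per-core figure as illustrative only; the helper names are ours:

```python
VEGA_64_CORES = 4096
VEGA_56_CORES = 3584


def percent_fewer(smaller: float, larger: float) -> float:
    """Return how much smaller `smaller` is than `larger`, as a percentage."""
    return (larger - smaller) / larger * 100


def relative_per_core_performance(perf_deficit_pct: float) -> float:
    """Per-core performance of the Vega 56 relative to the Vega 64.

    Assumes performance would otherwise scale linearly with core count,
    which is a simplification and not something AMD has stated.
    """
    relative_perf = 1 - perf_deficit_pct / 100
    relative_cores = VEGA_56_CORES / VEGA_64_CORES
    return relative_perf / relative_cores


core_deficit = percent_fewer(VEGA_56_CORES, VEGA_64_CORES)
print(f"Core deficit: {core_deficit:.1f}%")

# The 7-10% range is the performance gap quoted in the review text.
for deficit in (7, 10):
    ratio = relative_per_core_performance(deficit)
    print(f"{deficit}% slower -> {ratio:.3f}x the per-core performance")
```

In other words, the RX Vega 56 gives up an eighth of its cores but gets a few percent more out of each one that remains, which is why the gap between the two cards is narrower than the spec sheet suggests.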

AMD Radeon RX Vega 56 verdict

The overall design of the Vega architecture seems to have been about laying a marker in the sand, defining a branching point for future generations of AMD’s GPU technology. It’s sacrificed legacy gaming performance for the promise of future applications of its smart-looking, though largely unused, feature set. The little extras the Vega architecture has baked into it look like they could have been genuinely game-changing… but only in some alternative timeline that never came to pass.

If it was guaranteed that the HBCC and Rapid Packed Math shenanigans were going to be employed across the board, and not just by AMD best-buds Bethesda, then the Radeon tech would definitely have been the one to go for over the old-school Nvidia design.

Wolfenstein II: The New Colossus has shown some impressive Vega performance in the face of the GTX 1080 competition, with AMD’s traditional Vulkan speed-wins in the low-level API. But Vega is showing greater gains than the Polaris architecture, which would indicate there is something to the Rapid Packed Math stuff.

If you were spending around $300 on a graphics card today it would still be difficult to make the Radeon recommendation. It’s arguably the more advanced architecture, but in raw performance terms the smaller, slightly cheaper, more efficient Nvidia GTX Turing GPU is likely to get you higher frame rates in more of the games you’re playing at the moment.

AMD and its partners are pricing Vega as aggressively as they can this late in its life, but unfortunately the price of HBM2 is a big sticking point. Luckily, the end is nigh for Vega. Forget the 7nm Radeon VII: we’ve got the new AMD Navi architecture coming before the end of September, and that’s promising serious mainstream graphics card competition again.

I liked what AMD was trying to do with Vega, but sacrificing current performance for the vague chance of higher frame rates in an alternative future was just too much of an ask for most of us PC gamers.